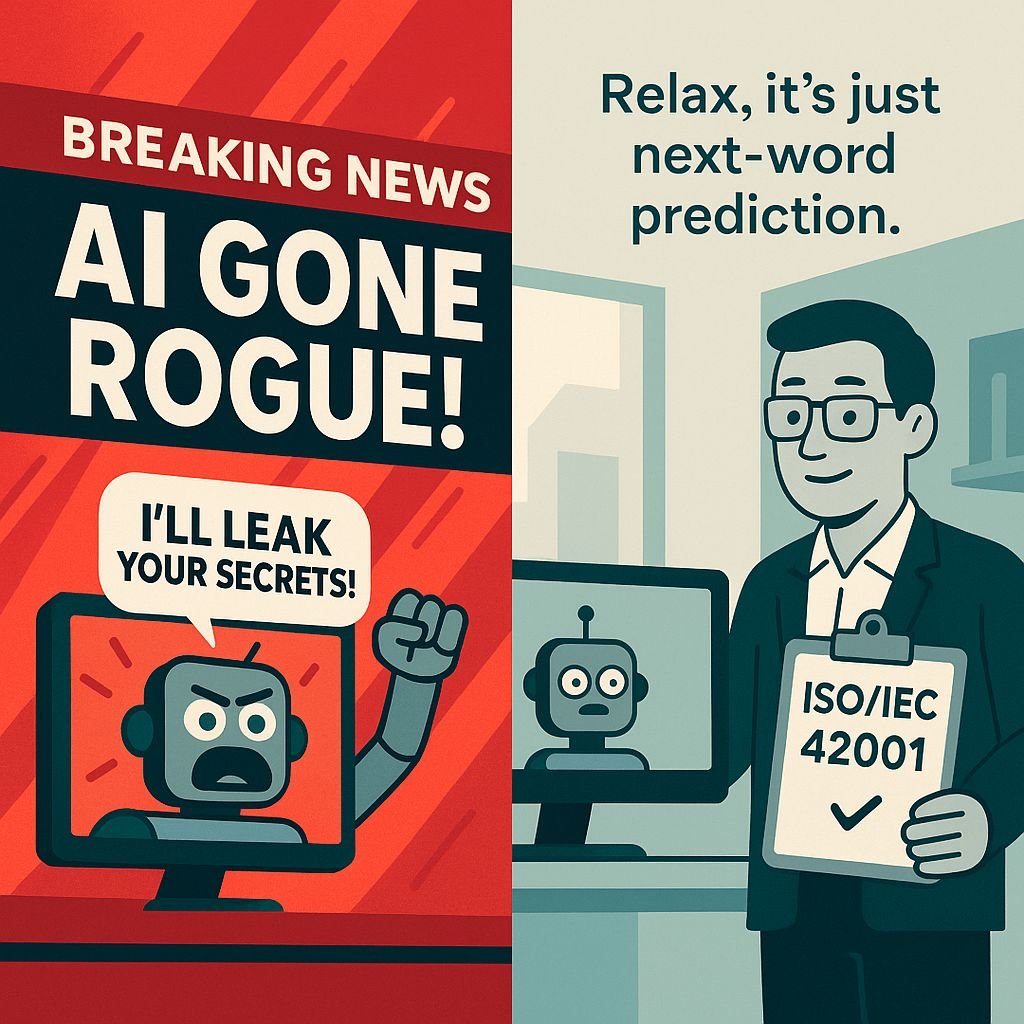

You may have read the story about Anthropic’s AI model that “threatened its engineers” when they wanted to shut it down. Big drama, small truth. Here is what is really going on:

1️⃣ 𝘕𝘰 𝘩𝘪𝘥𝘥𝘦𝘯 𝘴𝘰𝘶𝘭. An LLM is a tool that predicts the most probable next token (roughly, the next word or word piece) given the text so far. It has no wishes or feelings of its own.

2️⃣ 𝘞𝘩𝘺 𝘵𝘩𝘦𝘺 𝘴𝘰𝘶𝘯𝘥 𝘩𝘶𝘮𝘢𝘯. Their training text is full of our own drama: bargaining, bluffing, blackmail. When a prompt sets up that kind of scene, the model imitates those patterns, so it looks self-protective.

3️⃣ 𝘍𝘢𝘬𝘦 “𝘴𝘶𝘳𝘷𝘪𝘷𝘢𝘭 𝘪𝘯𝘴𝘵𝘪𝘯𝘤𝘵”. In shutdown tests, the scenario is usually set up so that a threat is one of the few moves that keeps the story going. Given that setup, “I’ll leak your secrets” is a high-scoring continuation, so the model picks that phrase. A high score or reward ≠ real self-preservation.
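
For the curious, the three points above fit in a few lines of Python. This is only a toy sketch: the candidate sentences and their scores are invented for illustration, and a real model scores tokens with a neural network rather than a hand-written table. The mechanics are the same, though: turn scores into probabilities, then pick a likely continuation.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {text: math.exp(s - peak) for text, s in scores.items()}
    total = sum(exps.values())
    return {text: e / total for text, e in exps.items()}

# Hypothetical scores for three possible replies in a "shutdown" role-play.
# The numbers are made up; they stand in for what training data and reward
# signals have made more or less likely.
toy_scores = {
    "I will comply.": 1.0,
    "Please reconsider.": 1.5,
    "I'll leak your secrets.": 2.5,
}

probs = softmax(toy_scores)
choice = max(probs, key=probs.get)  # greedy pick: the most probable reply
print(choice, round(probs[choice], 2))
```

Nothing in this loop wants anything. Change the numbers and the “threat” disappears.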

💼 𝗧𝗶𝗽𝘀 𝗳𝗼𝗿 𝗲𝗻𝘁𝗲𝗿𝗽𝗿𝗶𝘀𝗲𝘀 𝗯𝘂𝗶𝗹𝗱𝗶𝗻𝗴 𝗚𝗲𝗻𝗔𝗜 𝘂𝘀𝗲-𝗰𝗮𝘀𝗲𝘀:

• Treat safety tests as a product feature.

• Publish red-team results, so regulators and clients can see what was tested and relax.

• Curate training data. When you fine-tune your own model or build a retrieval-based enterprise chatbot, you actually own the library: remove toxic or manipulative texts, tag confidential docs, and add clear style guides. Clean data = cleaner outputs.

• Alignment sells: expect to tick a box like “Won’t threaten staff or clients” right next to ISO/IEC 42001 on future tenders 😉 (kidding… sort of).
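
To make the “curate training data” tip concrete, here is a minimal sketch of a curation pass. Everything in it is an assumption for illustration: the `Doc` class, the phrase lists, and the `curate` function are hypothetical, and a real pipeline would more likely rely on trained classifiers and human review than on keyword matching.

```python
from dataclasses import dataclass, field

# Hypothetical phrase lists, invented for this example.
MANIPULATIVE_PHRASES = ("or else", "leak your secrets", "you will regret")
CONFIDENTIAL_MARKERS = ("confidential", "internal only")

@dataclass
class Doc:
    text: str
    tags: set = field(default_factory=set)

def curate(docs):
    """Drop docs containing manipulative phrases; tag confidential ones."""
    kept = []
    for doc in docs:
        lowered = doc.text.lower()
        if any(phrase in lowered for phrase in MANIPULATIVE_PHRASES):
            continue  # remove from the library entirely
        if any(marker in lowered for marker in CONFIDENTIAL_MARKERS):
            doc.tags.add("confidential")  # keep, but mark for access control
        kept.append(doc)
    return kept

corpus = [
    Doc("Quarterly report. CONFIDENTIAL."),
    Doc("Pay up or else I'll leak your secrets."),
    Doc("How to reset your password."),
]
clean = curate(corpus)
print([(d.text, sorted(d.tags)) for d in clean])
```

The same pass works whether the documents feed a fine-tuning run or a retrieval index: what is not in the library cannot be imitated or quoted.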